The SEMANTIC WEB.

The Semantic Web = a Web with meaning.

"If HTML and the Web made all the online documents look like one huge book, RDF, schema, and inference languages will make all the data in the world look like one huge database."

Tim Berners-Lee with Mark Fischetti, Weaving the Web: The original design and ultimate destiny of the WWW, by its inventor.

"Now, miraculously, we have the Web. For the documents in our lives, everything is simple and smooth. But for data, we are still pre-Web."

Tim Berners-Lee, Business Model for the Semantic Web.

What Is The Semantic Web?

The word semantic means "relating to meaning". The semantics of something is its meaning.

The Semantic Web is a web that is able to describe things in a way that computers can understand.

Statements are built with syntax rules. The syntax of a language defines the rules for building the language statements.

But how can syntax become semantic?

This is what the Semantic Web is all about: describing things in a way that computer applications can understand.

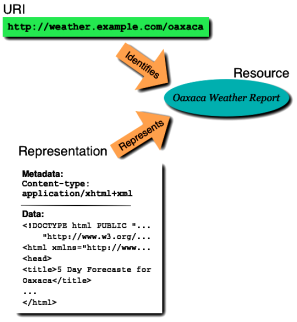

The Semantic Web is not about links between web pages.

The Semantic Web describes the relationships between things (like A is a part of B and Y is a member of Z) and the properties of things (like size, weight, age, and price).
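As a minimal sketch of this idea, both relationships and properties can be written as subject-predicate-object statements (triples), which is the shape of statement RDF uses. The `ex:` identifiers and the predicate names (`ex:partOf`, `ex:memberOf`, `ex:weight`, `ex:price`) are assumptions made up for illustration; they do not come from any real vocabulary.

```python
from typing import NamedTuple, Union


class Triple(NamedTuple):
    """One statement: a subject, a predicate, and an object (thing or value)."""
    subject: str
    predicate: str
    obj: Union[str, int, float]


# Hypothetical example data: two relationships and two properties.
statements = [
    Triple("ex:A", "ex:partOf", "ex:B"),    # relationship between things
    Triple("ex:Y", "ex:memberOf", "ex:Z"),  # relationship between things
    Triple("ex:A", "ex:weight", 12.5),      # property of a thing
    Triple("ex:A", "ex:price", 99),         # property of a thing
]


def describe(subject, triples):
    """Return every (predicate, object) pair stated about `subject`."""
    return [(t.predicate, t.obj) for t in triples if t.subject == subject]
```

Calling `describe("ex:A", statements)` collects everything stated about `ex:A`, whether it is a link to another thing or a plain value.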

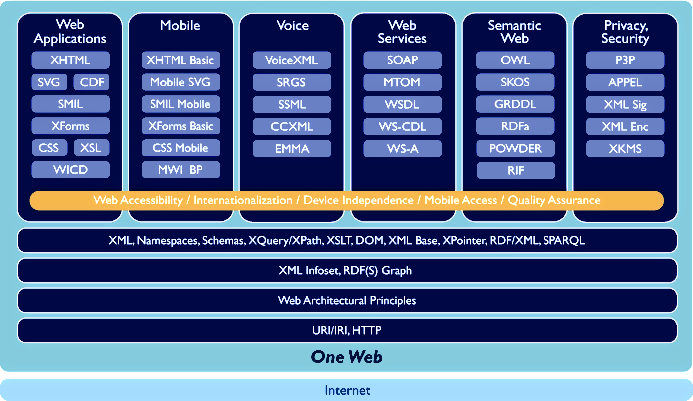

The principal technologies of the Semantic Web fit into a set of layered specifications.

The current components are:

- the "Resource Description Framework (RDF)" Core Model,

- the "RDF Vocabulary Description Language 1.0: RDF Schema" language, and

- the "Web Ontology Language (OWL)".

Building on these core components is SPARQL, a standardized query language and data access protocol for RDF that enables the 'joining' of decentralized collections of RDF data.

It is specified in three documents: SPARQL Query Language for RDF, SPARQL Protocol for RDF, and SPARQL Query Results XML Format.
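The 'joining' of separate collections can be illustrated with a small pattern matcher. This is a simplified sketch in plain Python, not SPARQL itself and not any library's API: it only matches triple patterns whose variables start with `?`. The two stores and all `ex:` names are hypothetical.

```python
def match(pattern, triple, bindings):
    """Unify one pattern with one triple; return extended bindings or None."""
    result = dict(bindings)
    for term, value in zip(pattern, triple):
        if isinstance(term, str) and term.startswith("?"):
            # A variable: bind it, or check it agrees with an earlier binding.
            if result.setdefault(term, value) != value:
                return None
        elif term != value:
            # A constant that does not match this triple.
            return None
    return result


def query(patterns, triples):
    """Return all variable bindings that satisfy every pattern at once."""
    solutions = [{}]
    for pattern in patterns:
        next_solutions = []
        for bindings in solutions:
            for triple in triples:
                extended = match(pattern, triple, bindings)
                if extended is not None:
                    next_solutions.append(extended)
        solutions = next_solutions
    return solutions


# Two independently maintained (hypothetical) collections of statements.
store_a = [("ex:alice", "ex:worksFor", "ex:acme")]
store_b = [
    ("ex:acme", "ex:locatedIn", "ex:berlin"),
    ("ex:initech", "ex:locatedIn", "ex:austin"),
]

# Roughly: SELECT ?person ?city
#          WHERE { ?person ex:worksFor ?org . ?org ex:locatedIn ?city }
patterns = [
    ("?person", "ex:worksFor", "?org"),
    ("?org", "ex:locatedIn", "?city"),
]
results = query(patterns, store_a + store_b)
```

The shared variable `?org` is what joins a statement from one store with a statement from the other; neither store alone can answer the question.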

RDF and OWL are Semantic Web standards that provide a framework for asset management, enterprise integration and the sharing and reuse of data on the Web.

These languages all build on the foundation of URIs, XML, and XML namespaces.
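One way to see how namespaces and URIs work together: a short prefixed name stands for a full URI, formed by appending the local name to the namespace URI. The sketch below assumes a hypothetical helper `expand`; the three namespace URIs are the ones published by the W3C for RDF, RDF Schema, and OWL.

```python
# Prefix-to-namespace table (the URIs are the W3C-published namespaces).
NAMESPACES = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "owl": "http://www.w3.org/2002/07/owl#",
}


def expand(qname, namespaces=NAMESPACES):
    """Expand a prefixed name such as 'rdf:type' into a full URI."""
    prefix, sep, local = qname.partition(":")
    if not sep or prefix not in namespaces:
        raise KeyError(f"unknown prefix in {qname!r}")
    return namespaces[prefix] + local
```

For example, `expand("rdf:type")` yields `http://www.w3.org/1999/02/22-rdf-syntax-ns#type`, a globally unique name that independently written programs can agree on.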

The goal of the Semantic Web initiative is as broad as that of the Web: to create a universal medium for the exchange of data.

It is envisaged to smoothly interconnect

• personal information management,

• enterprise application integration, and

• the global sharing of commercial, scientific and cultural data.

Facilities to put machine-understandable data on the Web are quickly becoming a high priority for many organizations, individuals and communities.

The Web can reach its full potential only if it becomes a place where data can be shared and processed by automated tools as well as by people.

For the Web to scale, tomorrow's programs must be able to share and process data even when these programs have been designed totally independently.

These standard formats for data sharing span application, enterprise, and community boundaries: all of these different types of 'user' can share the same information, even if they don't share the same software.

The W3C's announcement of RDF and OWL as Recommendations marked the emergence of the Semantic Web as a broad-based, commercial-grade platform for data on the Web.

The deployment of these standards in commercial products and services signals the transition of Semantic Web technology from what was largely a research and advanced development project over the last five years to more practical technology deployed in mass market tools that enables more flexible access to structured data on the Web.

W3C - Semantic Web - Activity Statement.

The Semantic Web is NOT a very fast growing technology.

"Inference engines are necessary for processing the knowledge available in the Semantic Web."

Inference engines deduce new knowledge from already specified knowledge.

Two different approaches are applicable here: (1) logic-based inference engines and (2) specialized algorithms (Problem Solving Methods).
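To illustrate the first approach, here is a minimal sketch of logic-based inference by forward chaining. It applies a single transitivity rule repeatedly until no new statements appear. The rule, the predicate `ex:partOf`, and the example facts are assumptions chosen for illustration; a real inference engine supports many rules and is far more efficient.

```python
def infer_transitive(triples, predicate):
    """Forward-chain one rule until a fixed point is reached:
    (a p b) and (b p c)  =>  (a p c)
    """
    known = set(triples)
    changed = True
    while changed:
        changed = False
        # Snapshot the current edges for this predicate.
        edges = [(s, o) for (s, p, o) in known if p == predicate]
        for a, b in edges:
            for b2, c in edges:
                if b == b2 and (a, predicate, c) not in known:
                    known.add((a, predicate, c))  # newly deduced statement
                    changed = True
    return known


# Hypothetical specified knowledge.
facts = [
    ("ex:bolt", "ex:partOf", "ex:wheel"),
    ("ex:wheel", "ex:partOf", "ex:car"),
    ("ex:car", "ex:partOf", "ex:fleet"),
]

closure = infer_transitive(facts, "ex:partOf")
deduced = closure - set(facts)
```

Here `deduced` holds only the statements that were not specified but follow from the rule, for instance that `ex:bolt` is part of `ex:fleet`.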

The World Wide Web is presently a very large collection of mainly static documents, a large database without logic.

More and more business is conducted over the Internet.

One of the most frequent problems is not only the availability of information (a Web presence alone has not yet produced marketing success), but also the intelligent handling of information exchange (B2B, B2C, Supply Chain Management, CRM, etc.), that is, the electronic management of strategic processes.

The WWW is presently growing mainly through associations that carry no meaning.

Good business intelligence for Internet businesses, or the generation of targeted offers for e-commerce, requires more than simply retrieving the needed information: the extraction and interpretation of information for a given problem are technologies that are available today.

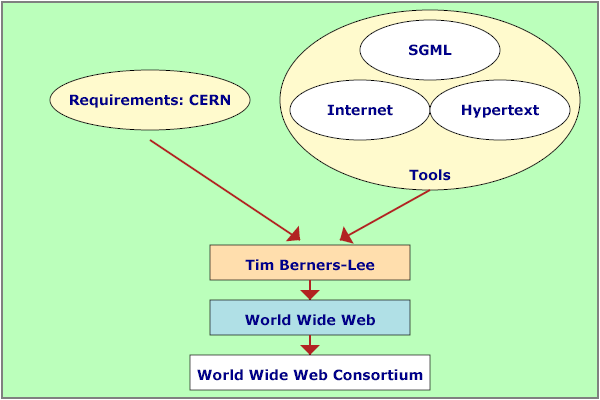

Tim Berners-Lee, the inventor of the WWW, established the vision of a "Semantic Web": an intelligent use of the WWW for the transmission and exchange of content that is intelligible to both machines and people.

The Semantic Web supports automatic services based on semantic descriptions.

Knowledge can be mediated only with the help of semantic

The lecture covers the most important drafts of the Semantic Web, introduces the modelling and analysis technologies, and gives an overview of present developments.